I’m more afraid of the flood of AI-generated CSAM and the countless people affected by non-consensual porn.

Careful with that thinking… I just got called a pedo for that.

AI isn’t on track to displace millions of jobs. Most of the automation we’re seeing was already possible with existing technology, but AI is being slapped on as a buzzword to sell it to the press/executives.

The trend is going the way of NFTs/Blockchain where the revolutionary “everything is going to be changed” theatrical rhetoric meets reality, where it might complement existing technologies but otherwise isn’t that useful on its own.

In programming, we went from “AI will replace everyone!!!” to “AI is a complementary tool for programmers but requires too much handholding to completely replace a trained and educated software engineer when maintaining and expanding software systems”. The same will follow for other industries, too.

Not to say it’s not troubling, but as long as capitalism and wage labour exist, we fundamentally cannot even imagine a technology that totally negates human labour, because without human labour the system would have to contemplate negating fundamental pillars of capital accumulation.

We fundamentally don’t have the language to describe systems that can holistically automate human labour.

…and no remote work! Office real estate will lose its value, and banks become sad.

Am I OOTL on something, or are we calling CSAM and nonconsensual porn “erotic roleplay” now?

Nobody was talking about either of those things until you came along. Why are they on your mind? Why do you immediately think of children being molested when you hear the word erotic?

I don’t. Again, the only complaints I’ve heard about AI porn are about those two things. If you have other examples, please share.

They clearly said erotic roleplay; you instantly thought about child porn. Simple as that. This was not a post about child porn. That is what’s on your mind.

When someone mentions AI and porn together, you’re right…that’s where my mind goes, because it’s an important issue and…again, the only thing I’ve heard any actual ethicists complain about. Why is your response to insinuate I’m a pedophile instead of addressing what I actually said?

Because, again, this was a post about erotic roleplay and YOU ALONE tried to turn it into child porn. NOBODY was talking about that. You came along and said ‘are we calling child porn erotic roleplay now?’

Please, for the love of god, stop bringing up child porn when nobody else was discussing it. That is not what this post was about.

They also specifically mentioned ethicists bitching about it. I haven’t even thought about sexting since the 90s…it literally never crossed my mind that’s what they were talking about. I follow AI, and I follow a lot of ethicists, and in my experience the ONLY overlap there is around those two topics. Maybe try asking the LLM for a definition of psychological association between fap sessions instead of throwing that Freudian shit at me…the last time anyone believed that, they needed a quill and a stamp to sext.

I give up dude.

Are you not capable of conceiving an AI-driven erotic roleplay that wouldn’t be classified as CSAM?

See my other reply…it’s not that, it’s that the only ethical concerns I’ve heard raised were about CSAM or using nonconsenting models.

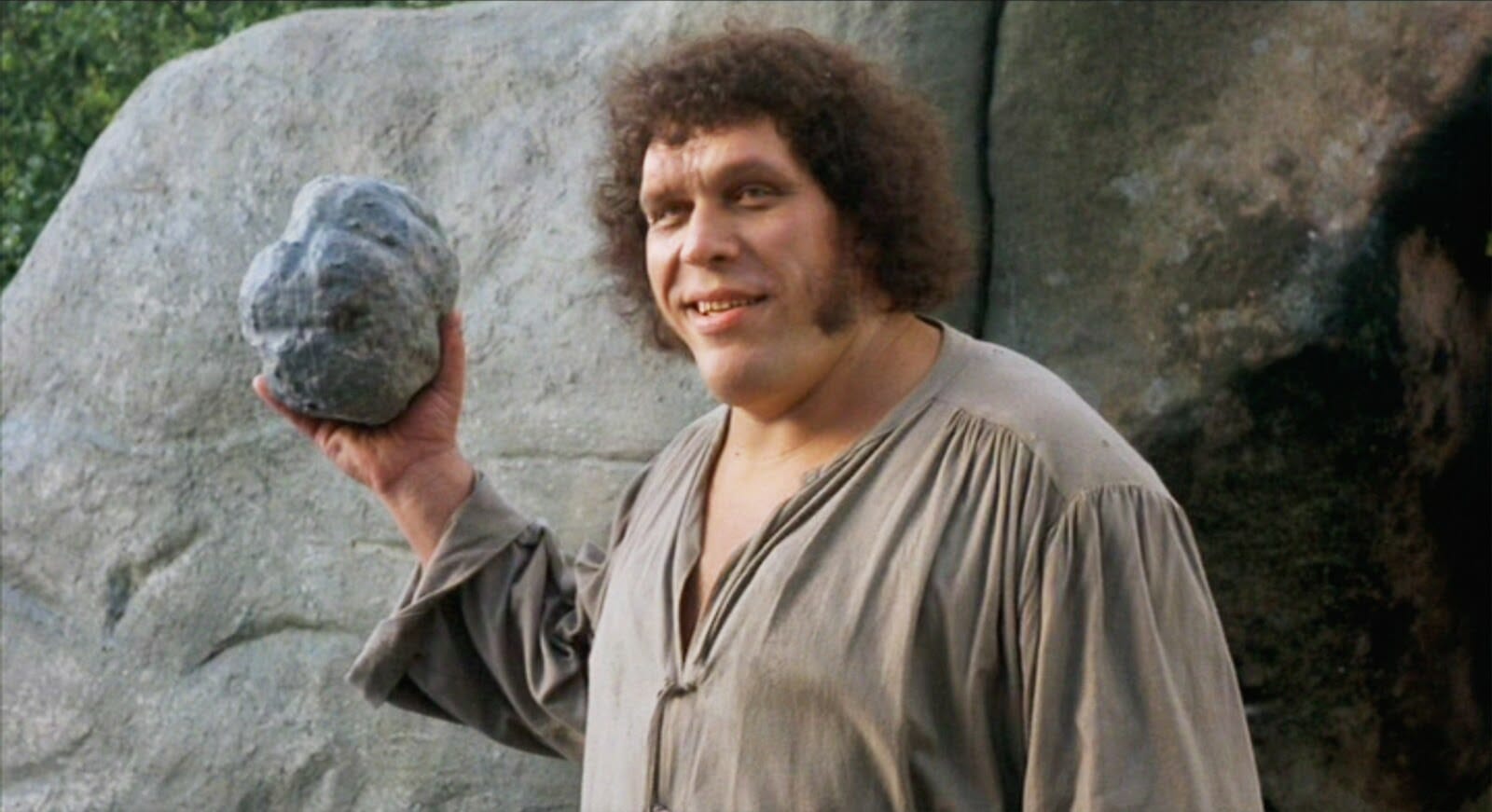

The “ethics” of most AIs I have futzed with would prevent them from complying with a request to act as a horny catgirl, but not to worry about agreeing to write an inspiring 1000-word essay about Celine Dion at a grade 7 reading level. My reading of the meme is that it’s about that sort of paradox.

The big fear a lot of people have with AI isn’t the technology itself, but more so the fact that its advancements are likely to lead to an even more disproportionate distribution of wealth.

Capitalism is working as intended. Support ticket closed.

If it didn’t, they would be legislating against it.

Displacing millions of workers

I have seen what AI outputs at an industrial scale, and I invite you to replace me with it while I sit back and laugh.

It’s not about entirely replacing people. It’s about reducing the number of people you hire in a specific role because each of those people can do more using AI. Which would still displace millions of people as companies get rid of the lowest performing of their workers to make their bottom line better.

It’s not about entirely replacing people

Tell yourself that all you wish. Then maybe go see this thread about Spotify laying off 1500 people and having a bit of a rough go with it. If they could they would try to replace every salaried/contracted human with AI.

Yeah, I’m not arguing that replacing people isn’t what they want to do. They absolutely would if they could. I was just responding to the person saying they can’t be replaced because AI can’t do what they do perfectly yet. My point was that, at least for now, it’s not entirely replacing people, but it’s still displacing lots of people as AI lets people do more work.

AI is making people able to do more work.

It’s not. AI is creating more work, more noise in the system, and more costs for people who can’t afford to mitigate the spam it generates.

The real value-add in AI is the same as shrinkflation. You dupe your clients into paying more for less by insisting work is getting done that isn’t.

This holds up so long as the clients never get wise to the con. But as the quality of output declines, it impacts delivery of service.

Spotify is already struggling to deliver services to its existing user base. It’s losing advertisers. And now it will have fewer people to keep the ship afloat.

I think that’s a really broad statement. Sure, there are some industries where AI hurts more than it helps in terms of quality, and of course there are examples of companies pushing it too far and getting burned for it, like Spotify. But in many others, a competent person using AI (someone who could do all the work without AI assistance, just slower) will be much more efficient and get things done much faster, because they can outsource certain parts of their job to AI. That increased efficiency is then used to cut jobs and create more profit for the companies.

Sure, there are some industries where AI hurts more than it helps in terms of quality.

In all seriousness, where has the LLM tech improved business workflow? Because if you know something I don’t, I’d be curious to hear it.

But in many others, a competent person using AI (someone who could do all the work without AI assistance, just slower) will be much more efficient and get things done much faster, because they can outsource certain parts of their job to AI.

What I have seen modern AI deliver in practice is functionally no different from what a good Google query would have yielded five years ago. A great deal of the value-add of AI in my own life has come as a stand-in for the deterioration of internet search and archive services. And because it’s black-boxed behind a chat interface, I can’t even tell whether the information is reliable. Not in the way I could when I was routed to a StackExchange page with a multi-post conversation about coding techniques, special circumstances, or additional references.

AI look-up is obscuring the more traditional linked-post explanations I’ve relied on to verify what I was reading. There’s no citation, no alternative opinion, and often no clarity as to whether the response even cleanly matches the query. Google’s Bard, for instance, keeps wanting to shoehorn MySQL and Postgres answers into questions about MSSQL coding patterns. OpenAI routinely hallucinates answers based on data gleaned from responses about different versions of a given coding suite.

Rather than giving a smoother front end to a well-organized Wikipedia-like back end of historical information, what we’ve received is a machine that sounds definitive regardless of the quality of the answer.

I work a shitty job that doesn’t care about me. I’ll likely have to do it the rest of my life. I really hope AI doesn’t take that away from me!

What are you saying? That people without jobs aren’t treated well?